“Good Data”

When it comes to appropriate data management, identifying the properties of what constitutes good data is the first step. The following concepts associated with data quality are particularly relevant in a school context:

Accessible

In schools, ensuring that data is only a few clicks away goes hand-in-hand with implementing the correct access permissions throughout all of the systems. Links between data also need to be established to support accessibility. For example, Parents / Guardians must be linked to their children, staff members need a broad ability to look up the students they teach, and administrators need high-level overviews of data.

Precise

High-quality data should provide enough information to be objectively correct. For example, a phone number needs a country code; an address and postal code. Similarly, forms need to be completely filled out to be useful.

Valid

Web forms are effective in ensuring that data matches the required format as it is entered. However, some applications, such as spreadsheets, can be more lenient and, thus, may require a post-data-entry step to double-check or cross-reference for validity.

Complete

Ensuring data is filled out can be accomplished by identifying the required fields that are mission-critical to the data point. In addition, there may be cases where optional fields need to satisfy specific cases. For example, not every student will have a medical alert, but when present, it needs to equip Teachers with specifics.

Timely

The collection of data needs to be efficiently scheduled to happen at a time when users are able to furnish it. Generally, users are happy to provide data once at the right time, but if they have to re-enter the same data throughout the year, it can erode quality data.

Consistent

The data in one system needs to match the data in another via syncing operations. In particular, contradictions need to be mitigated. They also need to be synchronised in a window of time that is acceptable, given the nature of the data.

Trustworthy

The data in the systems in the school infrastructure must act as the source of truth. Staff members may have copies of the information handy for their own purposes, but if inconsistency arises, users need to remember that it is the system that is the reference point. Ideally, the need for private copies is minimal.

Observations that help to understand how to maintain “good data”

With our definition of good data established, the second step is to identify how to strive for this. We’ll focus specifically on what this involves in terms of the processes associated with account management of students, teachers, and parents.

While there have been great advances in information systems pertaining to data storage recently, there remain several core principles that apply no matter which platform, database engine, or format the data takes.

The following observations provide a mental model for how to maintain high-quality data:

Data is consumed by both humans and computers

Data is consumed by humans when interacting with an interface and consumed by computers when stored or transmitted. Converting data from a human-readable form into computer-readable form is a common and well-understood process called serialisation.

When a user is interacting with software, the data is structured for the ease of the person to understand it. When it’s time to lay the data into storage or other digital medium, it must be serialised, or “flattened.”

Such flattened data is linked with permanent IDs and their associated fields. For example, a Student, Parent, and Teacher will have an ID that is just a sequence of digits or perhaps a string of characters, called a hash. This ID ties the data to the individual. It is a permanent ID used internally within that system.

Example from OpenApply

In order for the school to be able to associate the same person across many systems, an extra field is made available that can be filled in by the school. This is also sometimes called the “school ID” or “Student ID”. Its value can be determined entirely by the operator and is intended to link individuals across systems, where each respective system will have its own permanent IDs for individuals.

OpenApply uses this concept, and like many systems, has its own nomenclature. On a student’s profile page, for example, the “internal” ID is displayed as “Applicant ID” and “Student ID”.

Computers need permanent IDs; we need “monikers”

While the permanent ID is required for computers to do their jobs, humans also need an ID system to identify people or resources. School often call this kind of ID a “School ID” or some other localized acronym such as “ABC ID”. On some platforms, this ID is known as the “Local ID” that is unique across a school. Using the word “ID” to refer to both human-friendly and system IDs can lead to confusion, and so instead the use of a moniker is explained here.

For example, a room may be assigned a moniker of 3-02, indicating it’s on the third floor, while having an ID of 5482259. We prefer using handles that reveal something about identity as in the former, whereas computers just need an identifier to be unique, and never ever change. For computers, changing the ID is like changing the entity, akin to the absurdity of changing a passport ID. The only job of a permanent ID is to be permanent.

However, people who work in a community also need a way to identify things, and be able to do so in a digestible way. A community will also have a variety of systems and platforms which need quality data management. This is why it is so efficacious to associate or map these community-established monikers to the permanent IDs. In addition to solving a technical hurdle, it also affords operators the ability to create a moniker that makes sense to teachers and administrators.

Storing data onto a database is very similar to a spreadsheet

When you click “Save” or “Update” on a website form, the platform writes the data into some kind of flat structure – usually to a relational database.

A relational database can be thought of as a spreadsheet with many tabs, where each tab represents all the entities that the database tracks: Rooms, Books, Students, Teachers, Parents. Each spreadsheet tab stores data in fields – typically identified by the column headers. Each individual or item is represented by a row entry.

The key difference between databases and spreadsheets is similar to the difference between permanent IDs and monikers: the former is intended to be consumed by computers; the latter by humans.

Databases are far more reliable and, thus, able to achieve clean and accurate data, widely available to whoever needs it.

Maintaining data across systems is complicated by how data is stored

This is best illustrated by an example.

Suppose there are two systems, one which has a student S123 in Platform A and a student S123 in Platform B. Notice that they have different IDs in each respective platform but share the same moniker:

|

Platform A |

Platform B |

|||||||

|

ID |

Moniker |

Phone # |

ID |

Moniker |

Phone Numbers |

|||

|

Code |

Area |

Base |

Type |

|||||

|

631 |

S123 |

+01 555-1111 |

519 |

S123 |

1 |

555 |

1111 |

Mobile |

|

1 |

555 |

2222 |

Home |

|||||

|

632 |

S345 |

+01 555-9999 |

520 |

S345 |

1 |

555 |

9999 |

Home |

Whereas these platforms can be linked through the use of the moniker, there is the additional complication that the phone number in Platform B is stored with wholly different assumptions about the data point:

|

Assumptions by Platform A |

Assumptions by Platform B |

|

Only need one phone number |

There can be multiple phone numbers |

|

No need to label a phone number by category or tags |

Phone numbers have two tags: home or mobile |

|

The phone number can be represented with +00 000-0000 format |

The phone number is divided into sub-fields |

So, if a parent’s phone number in Platform A changes (and Platform A is the source of truth), and we want to follow the expectations regarding “good” data above, then we need to update it onto Platform B:

The following non-technical questions about Platform A’s phone numbers need to be answered before proceeding to update Platform B:

- How do we know the phone number is either “home” or “mobile” number?

- How do we know what is the base, and what is the area (should the phone number not be formatted correctly)?

- Do we only store one phone number in Platform B, even though it is capable of storing more?

The answers to these questions all involve trade-offs that cannot be answered by programmers alone. Thus, it can be a challenge to map data across systems, and often such trade-offs need careful consideration.

Aggregated data is useful for analytics, but its utility depends on the raw data being accurate

Aggregated data is a high-level overview or summary of the raw data, for example, breaking down a class by a count of males and females. A more complicated example of aggregation would not just be counting data points, but calculating averages in historical grade information.

A school can learn a lot about itself using aggregated data, however, doing so assumes that the raw data itself is all of the same quality and the data points are consistently populated.

Best Practices

Adhere to the single source of truth principle

In any given platform, there is a source and a destination (where data comes from and where it goes). For example, the learning management system may get its list of students from another database.

The single source of truth principle is often mistaken to mean that there should be only one source for all systems. In fact, the principle is about ensuring that there is only one location for updates of certain data points to occur.

If there is only one location for certain data points to be updated, and the rest of the data simply updates itself based on that source, either overnight or after a few hours, the result is that all of the data are now “in sync.”

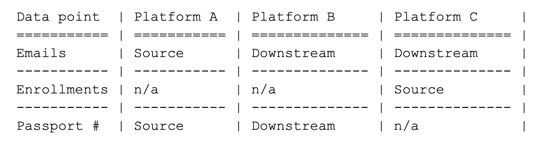

For example, the source for health information might be in Platform A, which is where it can be updated, while Platform B has read-only access to it. Meanwhile, Platform B can, additionally, be the single source of truth for classroom enrollments, which Platform C uses as read-only.

Upstream and downstream are components of the single source of truth principle

The above scenario has useful terminology to describe the single source of truth principle completely: “upstream” and “downstream.”

Data that is upstream relative to a platform means that there is some process needed to grab the latest information from that source. When the data is the “source” that indicates that data is considered to be correct.

In the scenario above, from the point of view of Platform B, the contact details (student’s parent’s email address) is “upstream” in Platform A, although Platform B itself is the source of truth for enrollments (students enrolled in classes).

The above also highlights how platforms can have different roles in the infrastructure, relying on other systems’ data being accurate and correct for some data points, but being the source of truths for others.

Identify threats to the single point of truth principle

In the course of the academic year, stakeholders might be tempted to grab instances of data to work from. This can break the data stream. For example, a teacher takes a photo of their timetable and keeps it on their desktop for easy reference. However, a change to the timetable occurs, and the teacher, instead of checking the system, assumes their screenshot is accurate.

People like to extract data into spreadsheets for their excellent data manipulation tools. Just like keeping our own notes for efficiency, spreadsheets can have a valuable role when a stakeholder needs to assess progress on a project or create a report. However, if that spreadsheet does not get updated from an upstream source, and yet is relied upon downstream, then the quality of the data suffers.

Breaking the single source of truth principle threatens trust

If data appears to be incorrect or not freshly updated in time, invariably, the system itself is blamed as being poor or inadequate. However, often the fault lies with information that has become dissociated from their source data, often via out-of-date spreadsheets or screenshots.

A community whose perception is that the information systems are not meeting the requirements adds frustration to the equation, which can further disrupt the problem-solving processes.

Workflows are a means to maintain quality data

When data is input into a system, it will eventually need to be utilised by a stakeholder. For example, a Parent may enter their child’s language skills as “intermediate” in Chinese, which will then need to be assessed by the language department for appropriate placement. Or perhaps the Parent enters an expected grade-level placement, and final grade placement would be subject to other factors, including date of birth and prior school history.

Workflows are particularly valuable regarding data that has been self-reported. They provide a mechanism for information to be verified, modified as necessary, or supplemented.

Conclusion

We have established what constitutes “good data” and robust data management practices. We have also considered common issues that may arise from breaking the “single source of truth” principle. It is important to remember that managing a data landscape as diverse and challenging as that required by a School community means a large investment in people.

People are the final consumers of the data and the system that is designed to manage it. Managing their expectations, their assumptions, and agreeing with them on a clear model for data management facilitates smooth processes.